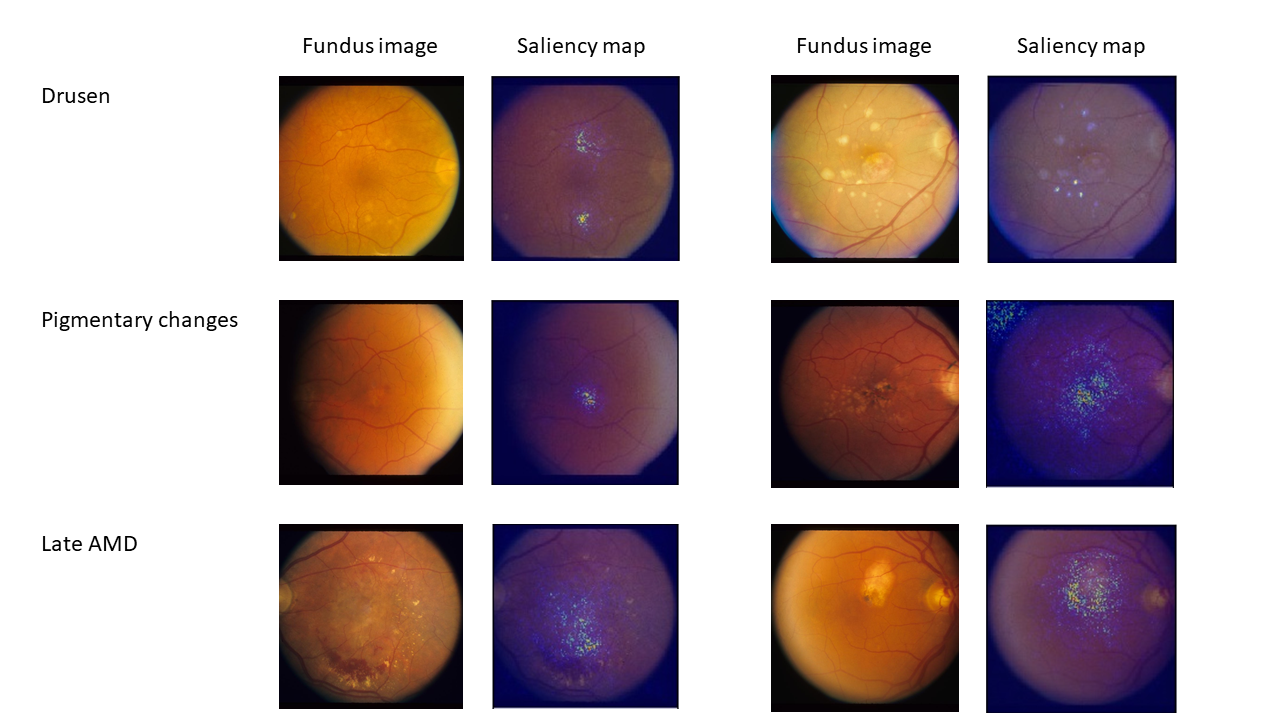

We developed a deep learning framework that classifies retinal color fundus photographs into a 6-class, patient-based age-related macular degeneration (AMD) severity score at a level that exceeds that of retinal specialists.

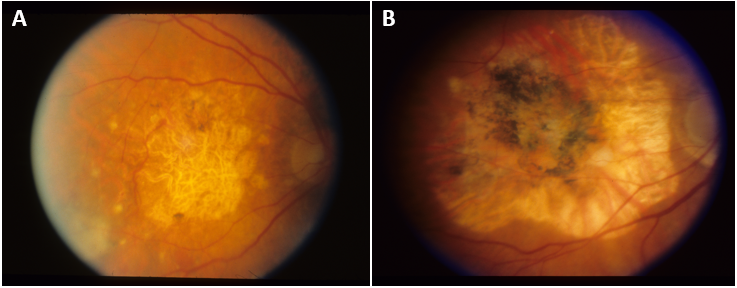

Age-related macular degeneration (AMD) is the leading cause of incurable blindness worldwide in people over the age of 65. The Age-Related Eye Disease Study (AREDS) Simplified Severity Scale uses two risk factors found in color fundus photographs (drusen and pigmentary abnormalities) to provide convenient risk categories for the development of late AMD. However, manual assignment can still be time-consuming and expensive, and it requires domain expertise.

Our model, DeepSeeNet, mimics the human grading process: it first detects risk factors in each eye (large drusen and pigmentary abnormalities) and then calculates a patient-based AMD severity score. DeepSeeNet was trained and validated on 59,302 color fundus photographs from 4,549 participants.
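
The second step, combining per-eye risk factors into a patient-level score, can be illustrated with a minimal sketch. This is not DeepSeeNet's actual code: `EyeFindings` and `patient_severity_score` are hypothetical names, and the scoring rules are assumptions based on our reading of the AREDS Simplified Severity Scale (one point per eye for each risk factor, one point for intermediate drusen in both eyes when neither has large drusen, and the top class of 5 when late AMD is present in either eye).

```python
from dataclasses import dataclass


@dataclass
class EyeFindings:
    """Per-eye findings. Hypothetical container, not part of DeepSeeNet's API."""
    large_drusen: bool
    pigmentary_abnormality: bool
    intermediate_drusen: bool = False
    late_amd: bool = False


def patient_severity_score(left: EyeFindings, right: EyeFindings) -> int:
    """Return a patient-based score from 0 to 5 (six classes).

    The rules below are assumptions for illustration, not the authors' code.
    """
    # Assumption: late AMD in either eye maps directly to the top class.
    if left.late_amd or right.late_amd:
        return 5

    # One point per eye for each risk factor that is present (0 to 4 in total).
    score = 0
    for eye in (left, right):
        score += int(eye.large_drusen) + int(eye.pigmentary_abnormality)

    # Assumption: intermediate drusen in both eyes count as one point
    # when neither eye has large drusen.
    no_large_drusen = not (left.large_drusen or right.large_drusen)
    if no_large_drusen and left.intermediate_drusen and right.intermediate_drusen:
        score += 1

    return score
```

Under these assumptions, a patient with large drusen in both eyes and a pigmentary abnormality in one eye would receive a score of 3. In the real pipeline, the boolean inputs would come from the per-eye detection models rather than being set by hand.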